Machine Learning

Machine learning helps annotators work through large sets of underwater imagery more efficiently. Rather than going in blind, annotators can use a pre-trained machine learning model to detect objects that have not yet been identified.

Currently, SOI has enabled one ML model in TATOR, called Megalodon. Megalodon is an object detection model with an Ultralytics YOLOv8x backbone, trained by researchers at the Monterey Bay Aquarium Research Institute (MBARI). It uses the FathomNet localization database for fine-tuning and detects a single class, which makes it useful for building a set of localized images when neither training data nor a model exists for the imagery at hand. In simpler terms, it is a general object detection model for underwater imagery.

In the future, we aim to add more models, including other variants of the YOLO series, RT-DETR, and species-specific models such as sea urchin detectors. Machine learning models can assist with a variety of tasks, including classification and tracking, but our initial set of models is primarily focused on object detection.

Using Pre-trained Models

In TATOR, there are two ways to work with machine learning models: automated and interactive. The automated ML models are first run on video import, when new cruise footage is uploaded into TATOR, and are then re-run once every quarter to improve existing results. The interactive ML models are “human-in-the-loop” learning models, in which the outputs of the model are continually fine-tuned based on previous manual detections. As a user, you can therefore both use and train these models interactively.

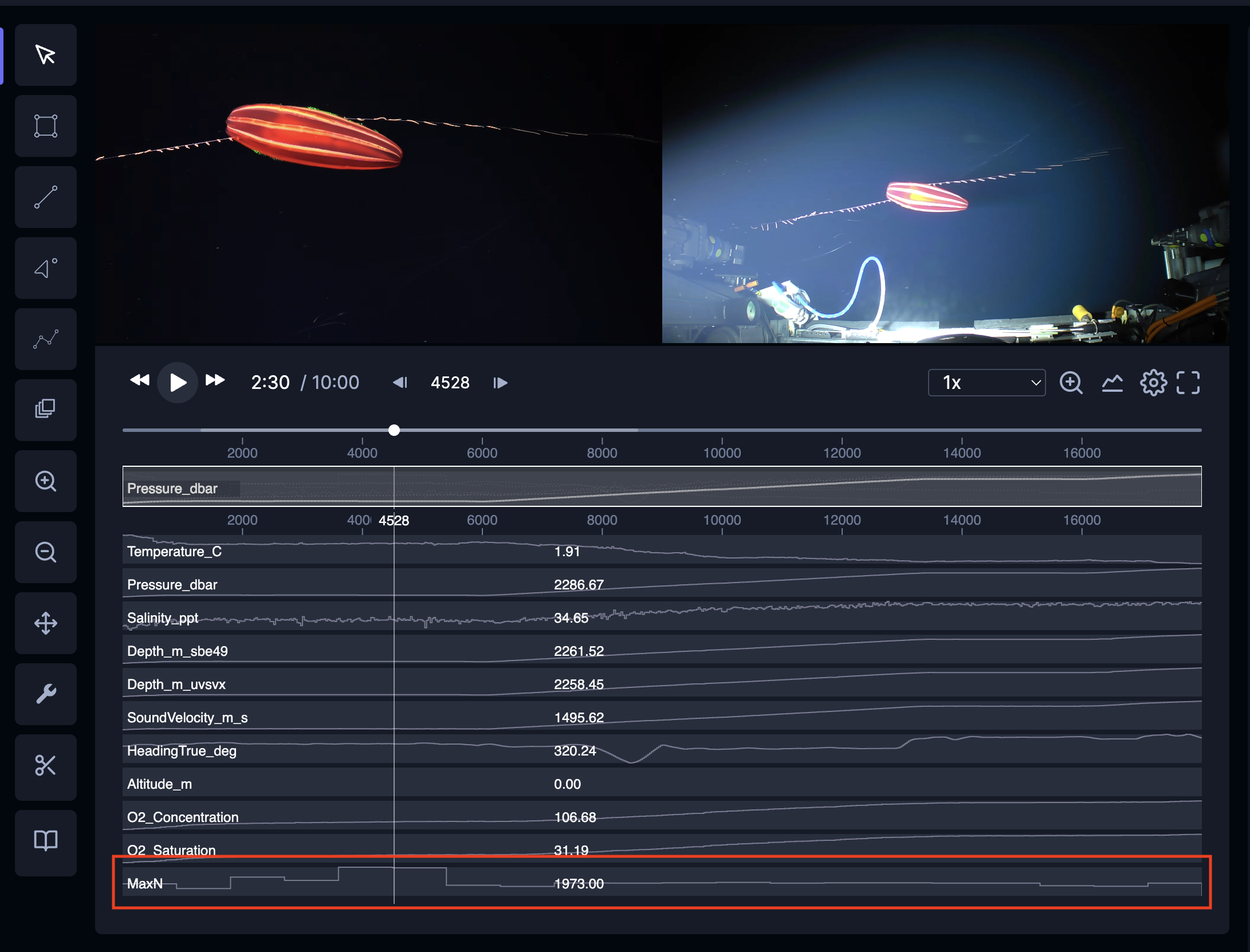

Given Megalodon’s size and general-purpose nature, it will be used as an automated model. TATOR will run the model automatically as soon as new dive footage is uploaded to the platform. The model detects the potential objects in each individual frame, and the number of detections gives a MaxN count for that frame. Beneath the video, in the observation tracks, you will be able to view these counts as a graph.
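As a rough illustration of the per-frame counting described above, the sketch below tallies detections by frame. It is not TATOR's implementation: the detection record layout (`frame`, `confidence`), the function name `count_per_frame`, and the confidence threshold are all hypothetical and chosen only for this example.

```python
from collections import Counter


def count_per_frame(detections, num_frames, min_confidence=0.5):
    """Return a list where index i is the number of detections kept for frame i.

    `detections` is a list of dicts with hypothetical keys "frame" and
    "confidence"; the threshold is an assumption, not a TATOR setting.
    """
    counts = Counter(
        det["frame"] for det in detections if det["confidence"] >= min_confidence
    )
    # Frames with no detections get a count of 0.
    return [counts.get(frame, 0) for frame in range(num_frames)]


# Made-up detections for a 4-frame clip.
detections = [
    {"frame": 0, "confidence": 0.91},
    {"frame": 0, "confidence": 0.34},  # below the threshold, so dropped
    {"frame": 2, "confidence": 0.77},
    {"frame": 2, "confidence": 0.62},
    {"frame": 2, "confidence": 0.58},
]

frame_counts = count_per_frame(detections, num_frames=4)
print(frame_counts)  # [1, 0, 3, 0]
```

Lowering `min_confidence` keeps more detections per frame, at the cost of more false positives.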

Using the MaxN feature

The goal of the MaxN feature is to help you see where a video has high activity, or a high concentration of AI-detected objects. Because these models are cutting-edge, we cannot guarantee that the results are 100% accurate. The intent of this feature is to assist your annotation process by pointing out “hotspots” in a video, reducing the need to watch it in full.

You may notice that the observation track holds a constant value for each 30 seconds of video, so it looks like a discrete step function rather than a continuous curve. We group detections into 30-second bins in order to convey these “hotspots”, rather than plotting every frame, where an accurate detection and a false positive would carry the same weight on the observation track.
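The binning can be sketched as follows. This is a minimal example under stated assumptions: it takes the maximum per-frame count within each 30-second bin (as the name MaxN suggests), whereas TATOR may aggregate differently, and the function name `bin_max_counts` and the `fps` parameter are hypothetical.

```python
def bin_max_counts(frame_counts, fps, bin_seconds=30):
    """Collapse per-frame counts into one value per time bin.

    Assumption: each bin reports the largest per-frame count it contains.
    The final bin may be shorter than `bin_seconds`.
    """
    frames_per_bin = int(round(fps * bin_seconds))
    if frames_per_bin <= 0:
        raise ValueError("fps * bin_seconds must be at least one frame")
    return [
        max(frame_counts[start:start + frames_per_bin])
        for start in range(0, len(frame_counts), frames_per_bin)
    ]


# Made-up counts for a 75-second clip sampled at 1 frame per second.
frame_counts = [0] * 30 + [2] * 10 + [5] + [1] * 19 + [0] * 15

binned = bin_max_counts(frame_counts, fps=1)
print(binned)  # [0, 5, 0]
```

Each bin value is drawn as a flat segment across its 30 seconds, which produces the step-like appearance of the track.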

To find the tracks, click the “graph” icon underneath the video, and a series of observation tracks will appear. The one titled “MaxN”, often found at the bottom of the tracks, is the relevant one for this feature. The feature may be confusing at first, so begin by seeking to the tallest bars on the MaxN track to quickly find potential species.
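The "tallest bars first" strategy amounts to ranking the bins by height. The sketch below shows the idea on made-up bin values; the function name `top_hotspots` and its parameters are hypothetical and do not correspond to anything in TATOR's interface.

```python
def top_hotspots(bin_values, bin_seconds=30, top_k=3):
    """Return (start_time_in_seconds, value) for the tallest non-empty bins.

    Ties are broken in favor of the earlier bin.
    """
    ranked = sorted(enumerate(bin_values), key=lambda pair: (-pair[1], pair[0]))
    return [
        (index * bin_seconds, value) for index, value in ranked[:top_k] if value > 0
    ]


# Made-up MaxN values for six consecutive 30-second bins.
bin_values = [0, 5, 0, 2, 7, 1]

hotspots = top_hotspots(bin_values)
print(hotspots)  # [(120, 7), (30, 5), (90, 2)]
```

Here the first place to seek would be 120 seconds into the video, followed by 30 seconds and then 90 seconds.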

This feature is currently available on the following cruises: FKt230602, FKt231202, FKt240224. In the future, we will be expanding the MaxN capabilities to other cruises, but we wanted to test it out on a few videos to start.

Note

In developing TATOR’s machine learning capabilities, we prioritized selecting an AI tool that not only delivered reliable results but also minimized computational demands. This decision was driven by SOI’s commitment to sustainable practices, recognizing the environmental impact of high-performance computing. Training and deploying machine learning models, especially those with large-scale architectures, can result in significant energy consumption. By choosing tools that balance effectiveness with efficiency, we aim to reduce our carbon footprint while maintaining high standards of useful, user-friendly tools.